Probability and statistics form the foundation of data analysis, enabling us to make informed decisions under uncertainty. These disciplines are crucial in various fields, providing tools for modeling and interpreting data.

1.1. Definition and Importance of Probability and Statistics

Probability and statistics are fundamental disciplines that deal with uncertainty and data analysis. Probability focuses on the likelihood of events, using concepts like probability density functions (PDFs) to model uncertainties. Statistics involves the collection, analysis, and interpretation of data to draw meaningful conclusions. Both fields are essential for making informed decisions in the face of uncertainty.

The importance of probability lies in its ability to quantify uncertainty, enabling predictions and risk assessments. Statistics, on the other hand, provides tools for extracting insights from data, making it indispensable in scientific research, engineering, and economics. Together, they form the backbone of data-driven decision-making across various industries.

Understanding these concepts is crucial for applications in real-world scenarios, such as quality control, machine learning, and economic forecasting. They empower professionals to model complex systems, analyze patterns, and make data-informed decisions.

1.2. Key Concepts in Probability Theory

Probability theory revolves around the study of chance events and their likelihood. Core concepts include probability mass functions (PMFs), which give the probability of each value of a discrete random variable, and probability density functions (PDFs), which describe continuous random variables: the probability that a continuous variable falls in an interval is the integral of its PDF over that interval. The sample space is the set of all possible outcomes of an experiment, and an event is a subset of the sample space. Key ideas like independence, conditional probability, and Bayes’ theorem are essential for understanding relationships between events.
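As a small illustration of Bayes’ theorem, the sketch below computes the probability that a person has a condition given a positive test result. The prevalence, sensitivity, and false-positive rate are hypothetical numbers chosen only for the example, and exact fractions are used so no rounding creeps in.

```python
from fractions import Fraction

def posterior(prior, p_pos_given_cond, p_pos_given_no_cond):
    """Return P(condition | positive test) via Bayes' theorem."""
    # Law of total probability: P(positive) over both cases
    p_pos = p_pos_given_cond * prior + p_pos_given_no_cond * (1 - prior)
    # Bayes' theorem: P(C | +) = P(+ | C) * P(C) / P(+)
    return p_pos_given_cond * prior / p_pos

# Hypothetical inputs: 1% prevalence, 90% sensitivity, 5% false-positive rate
result = posterior(Fraction(1, 100), Fraction(9, 10), Fraction(5, 100))
print(result)  # 2/13
```

Even with a fairly accurate test, a positive result here implies only about a 15% chance (2/13) of having the condition, because the condition is rare to begin with.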

Probability distributions, such as the binomial and normal distributions, model real-world phenomena. Measures like expected value and variance quantify central tendency and dispersion. These concepts form the foundation for analyzing uncertainty and making probabilistic predictions.
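For a discrete random variable, expected value and variance follow directly from its PMF: E[X] is the probability-weighted sum of the values, and Var(X) = E[X^2] - (E[X])^2. A minimal sketch, using a fair six-sided die as the assumed example:

```python
from fractions import Fraction

def expectation(pmf):
    """E[X] = sum of x * P(X = x) over the support of the PMF."""
    return sum(x * p for x, p in pmf.items())

def variance(pmf):
    """Var(X) = E[X^2] - (E[X])^2."""
    mean = expectation(pmf)
    return sum(x * x * p for x, p in pmf.items()) - mean ** 2

# PMF of a fair die: each face 1..6 has probability 1/6
die = {face: Fraction(1, 6) for face in range(1, 7)}
print(expectation(die), variance(die))  # 7/2 35/12
```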

Mastering these principles is vital for applications in engineering, economics, and computer science, where probabilistic modeling is crucial. They enable professionals to assess risks, simulate systems, and optimize decision-making processes effectively.

1.3. Key Concepts in Statistics

Statistics involves the collection, analysis, interpretation, and presentation of data. Descriptive statistics summarizes data through measures like the mean, median, and standard deviation, while inferential statistics draws conclusions about populations from sample data. Key tools include probability density functions (PDFs) and the t-distribution, which underpins hypothesis tests and confidence intervals for a mean when the population standard deviation must be estimated from the sample. Statistical methods like regression analysis help identify relationships between variables, enabling predictions and trend analysis. Statistical software handles complex calculations and data visualization, making it easier to derive meaningful insights. These tools are indispensable in fields from economics to computer science for making data-driven decisions and solving real-world problems effectively.
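Regression can be made concrete with ordinary least squares for a single predictor: the slope is the sum of centered cross-products divided by the sum of centered squares of x, and the line passes through the point of means. The following is a minimal sketch on hypothetical data; Python 3.10+ also offers `statistics.linear_regression` for the same fit.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = S_xy / S_xx
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = s_xy / s_xx
    # The fitted line passes through (mean_x, mean_y)
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data for illustration
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
slope, intercept = fit_line(xs, ys)
print(round(slope, 3), round(intercept, 3))  # 0.6 2.2
```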

Probability Theory

Probability theory explores the likelihood of events, using concepts like probability density functions (PDFs) and distributions to model uncertainty. It provides tools for predicting outcomes and making informed decisions.

2.1. Fundamental Concepts of Probability

Probability theory begins with basic concepts such as probability mass functions (PMFs) and probability density functions (PDFs), which describe how probability is spread over the possible values of a random variable. A probability is a number between 0 and 1 representing the chance that an event occurs. Events are sets of outcomes of interest, and their probabilities must satisfy the axioms of non-negativity, normalization (the whole sample space has probability 1), and additivity (probabilities of mutually exclusive events add). Random variables, which can be discrete or continuous, are central to modeling uncertainty. Distributions, such as the normal or binomial distribution, define how probabilities are assigned. Conditional probability, defined as P(A | B) = P(A and B) / P(B) when P(B) > 0, is the probability of A given that B has occurred; Bayes’ theorem builds on it to update probabilities when new information arrives. Key concepts also include independence, expectation, and variance; expectation and variance quantify the central tendency and spread of random variables. Understanding these fundamentals is essential for applying probability theory in real-world scenarios, from engineering to economics, and for building a foundation in advanced statistical analysis.
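For a finite sample space with equally likely outcomes, these definitions can be checked by direct enumeration. A minimal sketch, assuming two fair dice, that computes a conditional probability from its definition and tests independence with the product rule P(A and B) = P(A) * P(B):

```python
from fractions import Fraction
from itertools import product

# Sample space: the 36 equally likely outcomes (first die, second die)
OMEGA = list(product(range(1, 7), repeat=2))

def prob(event):
    """P(event) under the uniform probability measure on OMEGA."""
    return Fraction(sum(1 for w in OMEGA if event(w)), len(OMEGA))

def both(a, b):
    """The event 'A and B' (intersection of A and B)."""
    return lambda w: a(w) and b(w)

def cond_prob(a, b):
    """P(A | B) = P(A and B) / P(B); requires P(B) > 0."""
    return prob(both(a, b)) / prob(b)

def independent(a, b):
    """A and B are independent iff P(A and B) == P(A) * P(B)."""
    return prob(both(a, b)) == prob(a) * prob(b)

def first_is_3(w):
    return w[0] == 3

def sum_is_7(w):
    return w[0] + w[1] == 7

def sum_is_8(w):
    return w[0] + w[1] == 8

print(cond_prob(sum_is_8, first_is_3))    # 1/6
print(independent(sum_is_7, first_is_3))  # True
print(independent(sum_is_8, first_is_3))  # False
```

Note that "sum is 7" turns out to be independent of the first die's value, while "sum is 8" is not, which is easy to miss without checking the definition.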

2.2. Probability Distributions

Probability distributions are mathematical functions that describe the likelihood of different outcomes in an experiment. For discrete random variables, common distributions include the Bernoulli, binomial, and Poisson distributions, each modeling a specific type of event. Continuous distributions, such as the normal (Gaussian) and uniform distributions, assign probabilities to intervals of values. Key parameters of distributions, like the mean and variance, summarize their central tendency and spread. Distributions are essential for modeling real-world phenomena, such as the time until failure in engineering (often modeled with exponential or Weibull distributions) or the number of customers arriving in a queue during a fixed period (often modeled as Poisson). Understanding these distributions is crucial for hypothesis testing, confidence intervals, and predictive modeling. Many textbooks and resources explain them in detail, enabling learners to apply these concepts in fields from economics to computer science. Mastery of probability distributions is a cornerstone of statistical analysis and decision-making under uncertainty.
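The binomial and Poisson PMFs have short closed forms, and the normal CDF is available in Python's standard library. The sketch below uses only the standard library (Python 3.8+ for `math.comb` and `statistics.NormalDist`); SciPy's `scipy.stats.binom.pmf`, `scipy.stats.poisson.pmf`, and `scipy.stats.norm.cdf` compute the same quantities. The example parameters are illustrative assumptions.

```python
import math
from statistics import NormalDist

def binomial_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p): C(n, k) p^k (1 - p)^(n - k)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam):
    """P(X = k) for X ~ Poisson(lam): e^(-lam) lam^k / k!."""
    return math.exp(-lam) * lam**k / math.factorial(k)

# Exactly 5 heads in 10 fair coin flips
print(binomial_pmf(5, 10, 0.5))  # 0.24609375

# Exactly 2 arrivals in a period whose mean arrival count is 3
print(poisson_pmf(2, 3.0))

# P(Z <= 1.96) for a standard normal Z
print(NormalDist(mu=0, sigma=1).cdf(1.96))
```

The last two lines print roughly 0.224 and 0.975; the latter is why 1.96 appears in two-sided 95% intervals.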

2.3. Applications of Probability in Real-World Scenarios

Probability theory has vast applications in real-world scenarios, enabling decision-making under uncertainty. In engineering, probability is used to assess the reliability of systems and predict failure rates. Economists apply probability to model market risks and forecast economic trends. In computer science, probabilistic algorithms are essential for machine learning and data analysis. The insurance industry relies heavily on probability to calculate risks and determine premiums. Medical researchers use probability to analyze clinical trial outcomes and understand disease spread. Probability also informs everyday decisions, such as acting on a weather forecast's chance of rain, as well as operational ones, such as allocating resources. These applications highlight the versatility of probability in solving complex problems across diverse fields. By understanding probability, professionals can make data-driven decisions, managing uncertainty and improving outcomes in their respective domains.

Statistics

Statistics involves the collection, analysis, and interpretation of data to draw meaningful conclusions. It is essential for understanding patterns, trends, and relationships, enabling informed decision-making in various fields.

3.1. Descriptive Statistics

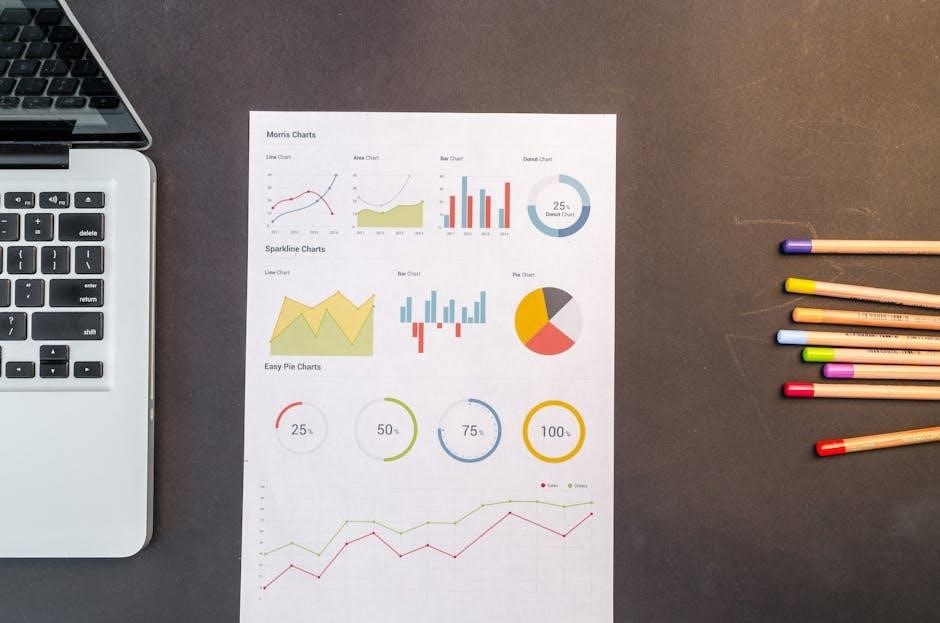

Descriptive statistics focuses on summarizing and describing the main features of a dataset. It involves calculating measures such as the mean, median, mode, range, and standard deviation to understand a dataset's center and variability. This branch of statistics also includes data visualization techniques like histograms, bar charts, and box plots to present data in an interpretable form. Descriptive statistics is essential for initial data exploration, identifying patterns, and cleaning data before applying advanced analytical methods. It provides a foundation for further statistical analysis by simplifying complex datasets into meaningful summaries. For continuous data, histograms and density estimates approximate the underlying probability density function (PDF), showing the ranges where data points are most likely to fall. By organizing and summarizing data, descriptive statistics facilitates informed decision-making across various fields, including engineering, economics, and computer science.
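Python's standard `statistics` module covers the common descriptive measures. A minimal sketch on a small hypothetical sample (the values are made up for illustration); `statistics.quantiles` requires Python 3.8+ and uses its default "exclusive" method here.

```python
import statistics

# Hypothetical sample, e.g., daily counts of support tickets
data = [12, 15, 11, 15, 18, 21, 15, 13, 19, 11]

summary = {
    "mean": statistics.mean(data),
    "median": statistics.median(data),
    "mode": statistics.mode(data),
    "range": max(data) - min(data),
    # Sample standard deviation (n - 1 denominator)
    "sample_stdev": statistics.stdev(data),
    # Cut points for the quartiles Q1, Q2, Q3
    "quartiles": statistics.quantiles(data, n=4),
}
for name, value in summary.items():
    print(f"{name}: {value}")
```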

3.2. Inferential Statistics

Inferential statistics involves drawing conclusions or making predictions about a population based on sample data. It uses probability theory to make inferences, often through hypothesis testing, confidence intervals, and regression analysis. Key methods include t-tests, z-tests, and chi-square tests, which help determine whether observed patterns are statistically significant, that is, unlikely to arise from chance variation alone. Inferential statistics also relies on probability distributions, such as the normal distribution, to model data and calculate probabilities. Techniques like ANOVA, which compares means across groups, and correlation analysis, which measures the association between variables, are widely applied. This branch of statistics is essential in research, enabling researchers to generalize findings beyond the sample data. By applying inferential methods, professionals in fields like medicine, engineering, and social sciences can make data-driven decisions with quantified uncertainty, provided the methods' assumptions (such as random sampling) hold.
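A one-sample t-test and a t-based confidence interval show these ideas on a small scale. The sketch below assumes hypothetical measurements (n = 10, so 9 degrees of freedom) and hard-codes the two-sided 95% critical value t = 2.262 from a standard t table rather than computing it; SciPy's `scipy.stats.ttest_1samp` returns the same statistic along with a p-value.

```python
import math
import statistics

def one_sample_t(data, mu0):
    """t statistic for H0: population mean == mu0."""
    se = statistics.stdev(data) / math.sqrt(len(data))  # standard error of the mean
    return (statistics.mean(data) - mu0) / se

def mean_ci(data, t_crit):
    """Confidence interval: sample mean +/- t_crit * standard error."""
    m = statistics.mean(data)
    half_width = t_crit * statistics.stdev(data) / math.sqrt(len(data))
    return m - half_width, m + half_width

# Hypothetical measurements of a process targeting 10.0
sample = [10.2, 9.8, 10.4, 10.1, 9.9, 10.3, 10.0, 10.5, 9.7, 10.1]
T_CRIT_95_DF9 = 2.262  # two-sided 95% critical value, df = 9 (from a t table)

t_stat = one_sample_t(sample, mu0=10.0)
low, high = mean_ci(sample, T_CRIT_95_DF9)
print(f"t = {t_stat:.3f}, 95% CI = ({low:.3f}, {high:.3f})")
# Reject H0 at the 5% level only if |t| exceeds the critical value
print("reject H0" if abs(t_stat) > T_CRIT_95_DF9 else "fail to reject H0")
```

Here t is about 1.22, below 2.262, so the sample does not provide evidence at the 5% level that the mean differs from 10.0; consistently, the interval contains 10.0.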

3.3. Applications of Statistics in Data Analysis

Statistics plays a pivotal role in data analysis by providing methods to extract insights and patterns from datasets. It enables professionals to make data-driven decisions, predict trends, and solve complex problems across various industries. Techniques such as regression analysis, hypothesis testing, and confidence intervals are widely used to analyze and interpret data. Statistical tools also help identify correlations and anomalies and, with appropriate study designs such as randomized experiments, estimate causal effects; these tasks are critical in fields like business, healthcare, and engineering. Additionally, statistics supports machine learning by providing foundational concepts like probability distributions and inference, which are essential for model development. By applying statistical methods, analysts can transform raw data into meaningful information, driving informed decision-making and innovation.
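Identifying correlations usually starts with the Pearson correlation coefficient, the covariance of two variables scaled by the product of their spreads, which always lies between -1 and 1. A minimal sketch on hypothetical paired data (Python 3.10+ also provides `statistics.correlation`). A high value indicates a strong linear association, not by itself a causal effect.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Hypothetical paired observations, e.g., advertising spend vs. sales
spend = [1, 2, 3, 4, 5]
sales = [2, 4, 5, 4, 5]
print(round(pearson_r(spend, sales), 3))  # 0.775
```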

Applications of Probability and Statistics

Probability and statistics are essential tools in engineering, economics, and computer science, enabling problem-solving, forecasting, and system optimization. They provide frameworks for data-driven decision-making across diverse industries.

4.1. Engineering Applications

Probability and statistics play a pivotal role in engineering, enabling the modeling and analysis of complex systems. Engineers use probability to assess system reliability, predict failure rates, and optimize designs under uncertainty. Statistical methods are employed to analyze experimental data, supporting the safety and efficiency of engineering solutions. For instance, in reliability engineering, probability distributions like the Weibull distribution are used to model component failures. In signal processing, statistical techniques help filter noise and improve data accuracy. Additionally, statistical process control monitors manufacturing processes to detect when they drift outside their control limits, helping reduce variability. These tools are indispensable in fields such as civil engineering for structural analysis and in aerospace engineering for risk assessment. By leveraging probability and statistics, engineers can make data-driven decisions, enhancing the performance and reliability of their designs.
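As a reliability sketch, the Weibull survival function R(t) = exp(-(t / scale)^shape) gives the probability that a component is still working at time t. Combining components then uses basic probability rules: a series system works only if every component works, and a parallel system fails only if every component fails. The shape and scale values below are hypothetical, and the system formulas assume component failures are independent.

```python
import math

def weibull_reliability(t, shape, scale):
    """R(t) = exp(-(t / scale) ** shape): P(component survives past time t)."""
    return math.exp(-((t / scale) ** shape))

def series_reliability(rs):
    """Series system: works only if all independent components work."""
    return math.prod(rs)

def parallel_reliability(rs):
    """Parallel (redundant) system: fails only if all independent components fail."""
    return 1 - math.prod(1 - r for r in rs)

# Hypothetical component: shape 1.5 (wear-out behavior), scale 1000 hours
r = weibull_reliability(500, shape=1.5, scale=1000)
print(f"single component R(500 h) = {r:.4f}")
print(f"two in series:   {series_reliability([r, r]):.4f}")
print(f"two in parallel: {parallel_reliability([r, r]):.4f}")
```

Redundancy (parallel) raises reliability above that of a single component, while chaining components in series lowers it.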

4.2. Economic Applications

Probability and statistics are integral to economic analysis, enabling researchers and policymakers to model and predict economic phenomena. Statistical methods are used to analyze data on income, employment, and inflation, informing policy decisions. Probability theory helps economists understand uncertainty in markets, such as stock price fluctuations or consumer behavior. Techniques like regression analysis and hypothesis testing are essential for identifying trends and causal relationships in economic data. Additionally, probabilistic models are used in risk assessment for investments and financial portfolios. In macroeconomics, statistics guide forecasting tools, while in microeconomics, they help analyze consumer choice and market dynamics. These tools are also applied in econometrics, where statistical models are developed to estimate economic relationships. By leveraging probability and statistics, economists can make data-driven decisions, contributing to sustainable economic growth and stability.
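Portfolio risk is a standard application of variance rules. For two assets with weights w1 and w2 = 1 - w1, volatilities sigma1 and sigma2, and correlation rho, the portfolio variance is w1^2 sigma1^2 + w2^2 sigma2^2 + 2 w1 w2 rho sigma1 sigma2. A minimal sketch with hypothetical volatilities of 20% and 10% and equal weights:

```python
import math

def portfolio_volatility(w1, sigma1, sigma2, rho):
    """Standard deviation of a two-asset portfolio with weights w1 and 1 - w1."""
    w2 = 1 - w1
    variance = (
        (w1 * sigma1) ** 2
        + (w2 * sigma2) ** 2
        + 2 * w1 * w2 * rho * sigma1 * sigma2  # covariance term
    )
    return math.sqrt(variance)

# Hypothetical annual volatilities: 20% and 10%, equal weights
for rho in (1.0, 0.0, -0.5):
    print(rho, round(portfolio_volatility(0.5, 0.20, 0.10, rho), 4))
```

With perfect correlation the portfolio volatility is simply the weighted average (15%); lower correlation reduces it, which is the statistical basis of diversification.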

4.3. Applications in Computer Science

Probability and statistics play a pivotal role in computer science, driving advancements in machine learning, artificial intelligence, and data analysis. Probabilistic models are essential for tasks like risk assessment, decision-making under uncertainty, and predictive analytics. In machine learning, statistical techniques such as regression, classification, and clustering enable algorithms to learn from data. Probability theory underpins Bayesian networks, which are used for reasoning and inference in complex systems. Statistics is crucial in data mining, helping identify patterns and relationships within large datasets. Computer vision relies on probabilistic methods for image recognition and processing. Additionally, statistical analysis is used to optimize algorithms, improve computational efficiency, and validate results through hypothesis testing. These applications highlight the importance of probability and statistics in shaping modern computing technologies and solving real-world problems effectively.
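One compact example of a probabilistic classifier is multinomial naive Bayes, which applies Bayes’ theorem under the simplifying assumption that words are conditionally independent given the class. It is used here only as an illustration; the text does not name a specific method. The training documents are hypothetical, and add-one (Laplace) smoothing is one common choice among several.

```python
import math
from collections import Counter

def train(docs):
    """Fit a multinomial naive Bayes model from (words, label) pairs."""
    class_counts = Counter(label for _, label in docs)
    word_counts = {label: Counter() for label in class_counts}
    for words, label in docs:
        word_counts[label].update(words)
    vocab = {w for words, _ in docs for w in words}
    priors = {label: n / len(docs) for label, n in class_counts.items()}
    return priors, word_counts, vocab

def predict(words, priors, word_counts, vocab):
    """Return the label with the largest log posterior (up to a shared constant)."""
    scores = {}
    for label, prior in priors.items():
        total = sum(word_counts[label].values())
        score = math.log(prior)  # log P(class)
        for w in words:
            if w not in vocab:
                continue  # ignore words never seen in training
            # Add-one smoothing keeps an unseen word/class pair from zeroing the product
            score += math.log((word_counts[label][w] + 1) / (total + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Tiny hypothetical training set
docs = [
    ("win cash prize now".split(), "spam"),
    ("cheap prize win".split(), "spam"),
    ("meeting agenda for monday".split(), "ham"),
    ("lunch on monday".split(), "ham"),
]
model = train(docs)
print(predict("win a prize".split(), *model))     # spam
print(predict("monday meeting".split(), *model))  # ham
```

Summing log-probabilities instead of multiplying raw probabilities avoids numerical underflow on longer documents.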

Tools and Software for Probability and Statistics

Statistical software such as R, Python, and MATLAB is essential for data analysis, enabling computations in probability and statistics. These tools facilitate modeling, simulations, and visualizations, enhancing problem-solving capabilities.

5.1. Statistical Packages and Their Uses

Statistical packages such as R, Python libraries (e.g., NumPy, pandas, and statsmodels), and MATLAB are widely used for data analysis and statistical computations. These tools provide robust functionality for descriptive statistics, hypothesis testing, and advanced modeling. For instance, R is known for packages like dplyr and ggplot2, which simplify data manipulation and visualization. Python’s scikit-learn is instrumental in machine learning applications, bridging probability and statistics with real-world problems. MATLAB, on the other hand, excels in numerical computations and simulations, making it a favorite in engineering and scientific research. These packages also support probability calculations, such as computing probabilities for normal distributions or performing t-tests. Their versatility allows users to handle complex datasets, making them indispensable in academia, research, and industry. By leveraging these tools, professionals can efficiently analyze data, model systems, and draw meaningful insights, fostering informed decision-making across diverse fields.

5.2. Software Tools for Probability Calculations

Software tools like Python’s SciPy and NumPy are essential for probability calculations, offering modules for statistical distributions and random number generation. R’s stats package provides functions for probability density functions (PDFs) and cumulative distribution functions (CDFs) for distributions like normal, binomial, and Poisson. MATLAB’s Statistics and Machine Learning Toolbox includes tools for hypothesis testing and probability modeling. Specialized tools like WinBUGS and OpenBUGS are used for Bayesian probability calculations, enabling complex probabilistic modeling. These tools also support simulations, allowing users to generate synthetic data for experimentation. Additionally, libraries like PyMC and Stan facilitate Bayesian inference, making them popular in modern probabilistic analysis. These software tools are invaluable for both educational purposes and real-world applications, enabling precise and efficient probability calculations across various fields.
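Simulation with synthetic data can be sketched with a seeded random number generator: a Monte Carlo estimate of a probability is simply the fraction of simulated trials in which the event occurs. The example below uses Python's standard `random` module to stay dependency-free (NumPy's `numpy.random.default_rng` offers a vectorized equivalent) and estimates the chance that two fair dice sum to 7, whose exact value is 1/6.

```python
import random

def estimate_prob_sum_seven(trials, seed=0):
    """Monte Carlo estimate of P(sum of two fair dice == 7)."""
    rng = random.Random(seed)  # seeded generator makes the synthetic data reproducible
    hits = 0
    for _ in range(trials):
        if rng.randint(1, 6) + rng.randint(1, 6) == 7:
            hits += 1
    return hits / trials

estimate = estimate_prob_sum_seven(100_000)
print(estimate, "vs exact", 1 / 6)
```

The estimate's standard error shrinks in proportion to 1 / sqrt(trials), so quadrupling the number of trials roughly halves the typical error.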

5.3. Case Studies Using Statistical Software

Case studies demonstrate the practical application of statistical software in real-world scenarios. For instance, in finance, R’s stats package was used to analyze stock market trends, employing linear regression and hypothesis testing. Python’s Pandas and SciPy libraries were utilized in healthcare to model patient outcomes, identifying key predictors of disease progression. In engineering, MATLAB’s Statistics Toolbox optimized manufacturing processes by applying probability distributions to quality control data. These tools enabled data visualization, hypothesis testing, and predictive modeling. A case study in economics used STATA to evaluate the impact of policy changes on employment rates, leveraging regression analysis. Such examples highlight how statistical software empowers data-driven decision-making across industries. These real-world applications underscore the importance of mastering these tools for solving complex problems and extracting actionable insights from data.

Learning Resources

Recommended textbooks include works by GJ Kerns and MJ Evans, offering comprehensive introductions to probability and statistics. Online courses like Stanford’s CS 109 provide practical learning opportunities. Utilize statistical packages such as R, Python libraries, MATLAB, and STATA for hands-on experience.

6.1. Recommended Textbooks on Probability and Statistics

6.2. Online Courses for Learning Probability and Statistics

Online courses provide flexible and accessible ways to learn probability and statistics. Platforms like Coursera and edX offer courses from top universities, and some university courses, such as Stanford’s CS 109: Probability for Computer Scientists, publish their materials online. These courses often include video lectures, quizzes, and hands-on projects, enabling learners to apply concepts practically. Some courses, like those based on CSE 312, focus on foundational topics, making them ideal for beginners. Advanced courses delve into machine learning and data analysis, connecting probability and statistics to real-world applications. Many online courses are self-paced, allowing learners to progress at their convenience, and many include discussion forums for interaction with peers and instructors. These resources are particularly useful for students and professionals seeking to enhance their analytical skills without traditional classroom constraints. Online courses are a valuable complement to textbooks, offering dynamic learning experiences.

6.3. Practical Tips for Mastering Probability and Statistics

Mastery of probability and statistics requires consistent practice and a deep understanding of foundational concepts. Start by solving basic probability problems to build intuition, gradually progressing to more complex scenarios. Focus on understanding distributions, such as the normal, binomial, and Poisson distributions, as they are fundamental to both probability and statistics. When working on statistical problems, always begin by summarizing your data using descriptive statistics. Practice hypothesis testing and confidence interval calculations to grasp inferential statistics. Utilize software tools like R or Python for hands-on experience with data analysis. Regularly review and apply concepts to real-world problems, reinforcing your understanding. Be meticulous with calculations and avoid rounding prematurely. Lastly, engage with study groups or forums to discuss challenging topics and learn from others’ perspectives. These practical steps will help solidify your knowledge and improve your problem-solving skills.

Advanced Topics in Probability and Statistics

Advanced topics explore complex probability distributions, stochastic processes, and cutting-edge statistical methods. These concepts are essential for tackling modern challenges in machine learning, big data, and advanced data analysis.

7.1. Machine Learning and Its Connection to Probability

Machine learning relies heavily on probability theory to model uncertainty and make data-driven decisions. Key concepts like probability distributions, Bayes’ theorem, and likelihood functions are foundational. Supervised learning uses probability to predict outcomes, while unsupervised learning identifies patterns in unlabeled data. Neural networks are commonly trained by minimizing a loss derived from a probability model, such as cross-entropy (a negative log-likelihood), which determines how their weights and biases are optimized. Applications include classification, regression, and clustering tasks. Probability theory enables machine learning algorithms to handle real-world complexities, such as noise and missing data. Advanced techniques like Bayesian networks and Markov chains further extend these capabilities. By integrating probability, machine learning models can generalize from training data to unseen scenarios, making them powerful tools in artificial intelligence and data science.
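The link between likelihood functions and training can be seen in binary cross-entropy, the mean negative log-likelihood of 0/1 labels when a model predicts P(y = 1) for each example. Minimizing it is equivalent to maximizing the Bernoulli likelihood of the data. A minimal sketch with hypothetical labels and predicted probabilities; the clipping constant `eps` is an assumption added to avoid log(0).

```python
import math

def binary_cross_entropy(labels, probs, eps=1e-12):
    """Mean negative log-likelihood of 0/1 labels given predicted P(y = 1)."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)

labels = [1, 0, 1, 1]
confident_and_right = [0.9, 0.1, 0.8, 0.95]
uninformative = [0.5, 0.5, 0.5, 0.5]
print(binary_cross_entropy(labels, confident_and_right))
print(binary_cross_entropy(labels, uninformative))  # ln 2, about 0.693
```

Predictions that assign high probability to the observed labels yield a lower loss than an uninformative coin-flip model.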

7.2. Big Data and Statistical Analysis

Big data has revolutionized the way we approach statistical analysis, enabling the processing of vast datasets to uncover patterns and insights. Statistical methods are essential for extracting meaningful information from large-scale data, often characterized by high volume, variety, and velocity. Techniques like hypothesis testing, regression analysis, and machine learning algorithms are widely applied to analyze big data. Tools such as Hadoop, Spark, and Python libraries like pandas and NumPy facilitate efficient data processing. Statistical models help in predicting trends, identifying correlations, and making informed decisions. The integration of probability theory with big data analysis further enhances the ability to handle uncertainty and variability in complex datasets. As big data continues to grow, advanced statistical methods play a pivotal role in deriving actionable insights and driving decision-making across industries.
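When data arrive as a stream too large to hold in memory, summary statistics can be computed in a single pass. One standard technique is Welford's online algorithm for the mean and variance, sketched below; it is shown as an illustration and is not a claim about how Hadoop, Spark, or pandas compute their aggregates internally. The short input sequence stands in for a much larger stream.

```python
import math

class RunningStats:
    """Single-pass mean and variance using Welford's online algorithm."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the current mean

    def push(self, x):
        """Incorporate one new observation without storing past values."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    def variance(self):
        """Sample variance (n - 1 denominator); needs at least two values."""
        return self._m2 / (self.n - 1)

stats = RunningStats()
for value in (12, 15, 11, 15, 18, 21, 15, 13, 19, 11):  # stand-in for a large stream
    stats.push(value)
print(stats.mean, math.sqrt(stats.variance()))
```

Compared with the textbook formula E[X^2] - (E[X])^2, this update avoids subtracting two large, nearly equal numbers, which keeps it numerically stable on long streams.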